The main directories involved are:

- `platform/frameworks/native` (master): `libs/`

- `platform/frameworks/base` (master): `core/jni/`

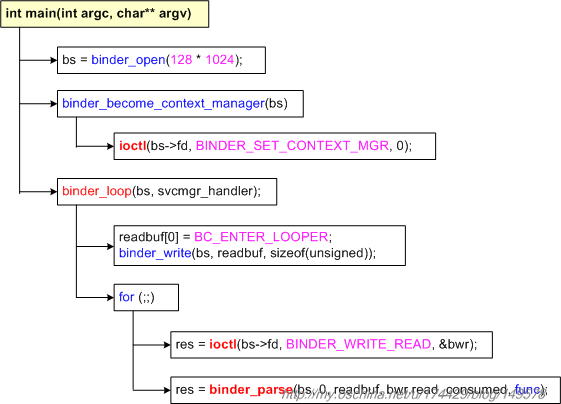

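ServiceManager startup on the native side

I originally wrote out a long list of steps here. Then I realized that, for the details, you are better off reading this article directly:

"Binder Series 3: Starting ServiceManager"

Distilled, the key steps are: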

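First, `binder_open(driver, 128*1024)` is called. Internally it:

- opens the `/dev/binder` file: `bs->fd = open("/dev/binder", O_RDWR);`. This call enters the Binder driver. The driver saves the context of the calling process and sets up several red-black trees. These trees store the server-side Binder entities, the client-side Binder references, and so on.
- records the size of the mapping: `bs->mapsize = mapsize;`
- creates a 128 KB memory mapping: `bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);`. This call also enters the Binder driver. The driver maps the same physical pages into both the process's virtual address space and the kernel's virtual address space. This saves one memory copy between the process and the kernel, which improves IPC efficiency.

  For example, suppose a Client wants to pass a block of memory to a Server. The usual approach takes two copies:
  - the Client copies the data from its own address space into kernel space;
  - the kernel copies it again from kernel space into the Server's address space, where the Server can access it.

  With the mapping described above, the data is copied only once, from the Client's address space into the kernel buffer. The Server shares that buffer with the kernel, so the whole transfer needs a single copy.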
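The single-copy idea rests on one physical page being visible at two virtual addresses. The driver sets this up between a process and the kernel, which user code cannot reproduce directly. The sketch below is therefore only a user-space analogy (an assumption for illustration, not what the driver does):

- it maps one temporary file twice with `MAP_SHARED`;
- it writes through the first mapping;
- it reads the same bytes back through the second mapping, with no second copy.

The function name is hypothetical.

```cpp
#include <cstdio>
#include <cstring>
#include <string>
#include <sys/mman.h>
#include <unistd.h>

// Analogy only: two MAP_SHARED mappings of one file are backed by the same
// physical page(s), so data written through one view is visible in the other.
// Returns what the "reader" view sees, or "" if any step fails.
std::string write_via_one_view_read_via_other(const char* msg) {
    const size_t kSize = static_cast<size_t>(sysconf(_SC_PAGESIZE));
    std::FILE* f = std::tmpfile();  // temporary file, removed on fclose
    if (f == nullptr) return "";
    const int fd = fileno(f);
    if (ftruncate(fd, static_cast<off_t>(kSize)) != 0) {  // one zero-filled page
        std::fclose(f);
        return "";
    }
    void* writer = mmap(nullptr, kSize, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    void* reader = mmap(nullptr, kSize, PROT_READ, MAP_SHARED, fd, 0);
    std::string seen;
    if (writer != MAP_FAILED && reader != MAP_FAILED) {
        // The "sender" writes once; the last byte stays 0, so the text is terminated.
        std::strncpy(static_cast<char*>(writer), msg, kSize - 1);
        // The "receiver" reads through a different virtual address: no extra copy.
        seen.assign(static_cast<const char*>(reader));
    }
    if (writer != MAP_FAILED) munmap(writer, kSize);
    if (reader != MAP_FAILED) munmap(reader, kSize);
    std::fclose(f);
    return seen;
}
```

For instance, `write_via_one_view_read_via_other("hello binder")` returns `"hello binder"`, even though the string was only ever written through the other mapping.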

These three values are kept in a `binder_state` struct:

```c
struct binder_state
{
    int fd;           // file descriptor of the driver
    void *mapped;     // start address of the mapped memory
    unsigned mapsize; // size of the mapped memory
};
```
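Putting the three steps together, a simplified `binder_open()` might look like the sketch below. A few things differ from the real function:

- it is written in C++ rather than the C used by servicemanager;
- `binder_open_sketch` and `binder_close_sketch` are hypothetical names;
- to keep it self-contained, it skips the `ioctl(BINDER_VERSION)` protocol check that the real function performs.

What it does show is the error handling: if `mmap()` fails, the descriptor opened earlier is closed again.

```cpp
#include <cstddef>
#include <fcntl.h>
#include <memory>
#include <sys/mman.h>
#include <unistd.h>

// Same fields as the binder_state struct above (size_t instead of unsigned).
struct binder_state {
    int fd;          // file descriptor of the driver
    void* mapped;    // start address of the mapped memory
    size_t mapsize;  // size of the mapped memory
};

// Hypothetical, simplified binder_open(): open the device, then map `mapsize`
// bytes read-only. Returns nullptr on failure after releasing what was acquired.
std::unique_ptr<binder_state> binder_open_sketch(const char* driver, size_t mapsize) {
    auto bs = std::make_unique<binder_state>();
    bs->fd = open(driver, O_RDWR | O_CLOEXEC);
    if (bs->fd < 0) return nullptr;        // e.g. no Binder driver on this machine
    bs->mapsize = mapsize;
    bs->mapped = mmap(nullptr, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);
    if (bs->mapped == MAP_FAILED) {
        close(bs->fd);                     // undo the open() before failing
        return nullptr;
    }
    return bs;
}

// Hypothetical counterpart: unmap the region and close the descriptor.
void binder_close_sketch(std::unique_ptr<binder_state> bs) {
    if (!bs) return;
    munmap(bs->mapped, bs->mapsize);
    close(bs->fd);
}
```

On a device it would be called as `binder_open_sketch("/dev/binder", 128 * 1024)`. On a machine without the Binder driver, `open()` simply fails and the function returns `nullptr`.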

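Next, it tells the Binder driver that it is the context manager (the daemon):

```c
int binder_become_context_manager(struct binder_state *bs)
{
    return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);
}
```

It uses the `ioctl` file operation to tell the Binder driver that it is the daemon. The command is `BINDER_SET_CONTEXT_MGR` and there is no argument. Inside the driver, this: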

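- sets `binder_context_mgr_uid` to `current->cred->euid`. `binder_context_mgr_uid` records the uid of the Service Manager daemon, and this is what makes the current process the context manager of the Binder mechanism;
- creates a Binder entity (node) with `binder_new_node()`. `binder_context_mgr_node` represents the Service Manager's Binder entity.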
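The driver-side bookkeeping can be pictured with the toy model below. It is not kernel code, and `ContextModel` and `BinderNode` are hypothetical. The `-EBUSY`/`-EPERM` checks reflect my reading of `binder_ioctl_set_ctx_mgr()` in recent kernels; older versions may differ.

The model shows why there can be only one context manager:

- the first caller records its euid and gets a node;
- a second caller is rejected while that node exists;
- once the node is gone, only the recorded uid may register again.

```cpp
#include <cerrno>
#include <optional>
#include <sys/types.h>

// Toy stand-in for binder_context_mgr_node: which process owns it.
struct BinderNode { int owner_pid; };

// Hypothetical model of the driver state touched by BINDER_SET_CONTEXT_MGR.
class ContextModel {
public:
    // 0 on success, -EBUSY if a manager node already exists,
    // -EPERM if a manager uid was recorded earlier and the caller's euid differs.
    int set_context_mgr(int pid, uid_t euid) {
        if (mgr_node_) return -EBUSY;
        if (mgr_uid_ && *mgr_uid_ != euid) return -EPERM;
        mgr_uid_ = euid;              // like binder_context_mgr_uid = current euid
        mgr_node_ = BinderNode{pid};  // like binder_context_mgr_node = binder_new_node(...)
        return 0;
    }
    // The manager process died and its node was released; the recorded uid stays.
    void manager_died() { mgr_node_.reset(); }
    int manager_pid() const { return mgr_node_ ? mgr_node_->owner_pid : -1; }

private:
    std::optional<uid_t> mgr_uid_;
    std::optional<BinderNode> mgr_node_;
};
```

For example, after `set_context_mgr(100, 1000)` succeeds, a second call from any process returns `-EBUSY` until `manager_died()` is called.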

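Finally, it enters a loop that waits for requests to arrive: `binder_loop(bs, svcmgr_handler)`. Binder requests are handled by the `svcmgr_handler` function. When there are no requests, the thread blocks inside the driver's `binder_ioctl()`, in `wait_event_interruptible_exclusive()`.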
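The blocking behaviour of `binder_loop()` can be modelled in user space with a mutex and a condition variable:

- `wait_pop()` plays the role of `wait_event_interruptible_exclusive()`;
- `push()` plays the role of `wake_up_interruptible()`;
- the handler passed to `looper()` stands in for `svcmgr_handler`.

This is an analogy under that assumption, not the driver's implementation, and `TodoQueue` and `looper` are hypothetical names.

```cpp
#include <condition_variable>
#include <deque>
#include <functional>
#include <mutex>
#include <optional>
#include <string>

// Hypothetical user-space stand-in for a thread's todo list in the driver.
class TodoQueue {
public:
    void push(std::string work) {
        {
            std::lock_guard<std::mutex> lk(mu_);
            todo_.push_back(std::move(work));
        }
        cv_.notify_one();  // wake one sleeping looper, like wake_up_interruptible()
    }
    void close() {         // only so the model can terminate
        {
            std::lock_guard<std::mutex> lk(mu_);
            closed_ = true;
        }
        cv_.notify_all();
    }
    // Sleeps until work arrives (or the queue is closed and drained).
    std::optional<std::string> wait_pop() {
        std::unique_lock<std::mutex> lk(mu_);
        cv_.wait(lk, [this] { return !todo_.empty() || closed_; });
        if (todo_.empty()) return std::nullopt;
        std::string w = std::move(todo_.front());
        todo_.pop_front();
        return w;
    }

private:
    std::mutex mu_;
    std::condition_variable cv_;
    std::deque<std::string> todo_;
    bool closed_ = false;
};

// Shape of binder_loop(bs, func): block for a request, hand it to the handler,
// repeat. Returns how many requests were handled.
int looper(TodoQueue& q, const std::function<void(const std::string&)>& handler) {
    int handled = 0;
    while (auto work = q.wait_pop()) {
        handler(*work);
        ++handled;
    }
    return handled;
}
```

In a real program `looper()` would run on its own thread and sleep inside `wait_pop()` until another thread calls `push()`.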

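Obtaining the ServiceManager remote interface on the native side

In the Binder mechanism, Service Manager acts both as the daemon (context manager) and as a Server. It is not an ordinary Server, though.

For an ordinary Server, a Client that wants the Server's remote interface must go through the getService interface of the Service Manager remote interface. getService is itself an IPC call over Binder. It looks up, by name, the handle of the Binder reference that corresponds to the Server's Binder entity, and that handle is used to create a BpBinder.

For Service Manager itself, however, a Client needs no IPC to obtain the remote interface. The Service Manager remote interface is a special Binder reference whose handle is always 0.

The function that obtains the Service Manager remote interface is:

```cpp
sp<IServiceManager> defaultServiceManager()
```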

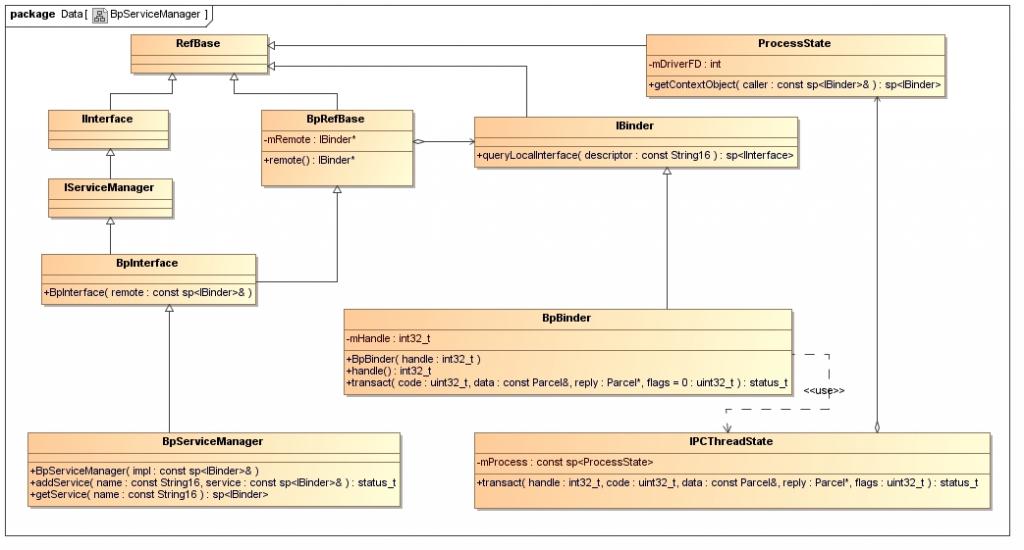

一个相关的类继承关系图:

从图中可以看到:

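`BpServiceManager` inherits from `BpInterface<IServiceManager>`. `BpInterface` is a class template that inherits from both its template parameter (here `IServiceManager`) and `BpRefBase`. Its constructor takes an `sp<IBinder>`:

```cpp
template<typename INTERFACE>
class BpInterface : public INTERFACE, public BpRefBase
{
public:
    BpInterface(const sp<IBinder>& remote);
protected:
    virtual IBinder* onAsBinder();
};
```

`IServiceManager` inherits from `IInterface`, and both `IInterface` and `BpRefBase` inherit from `RefBase`. `BpRefBase` has a member variable `mRemote` of type `IBinder*`. Its concrete class is `BpBinder`, which represents a Binder reference; the handle is stored in the `mHandle` member of `BpBinder`. Through `BpRefBase`, `BpServiceManager` therefore holds the `mRemote` pointer it uses to communicate over Binder.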
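The ownership relationships described above can be sketched as follows. This is a heavily simplified model, not the libbinder headers:

- `std::shared_ptr` stands in for `sp<>`;
- there is no real IPC;
- the interface methods are omitted.

It shows that `BpServiceManager` gets `remote()` from `BpRefBase`, and that `remote()` is a `BpBinder` carrying only a handle.

```cpp
#include <cstdint>
#include <memory>
#include <utility>

class IBinder {
public:
    virtual ~IBinder() = default;
};

// A Binder reference: all it carries is the handle (mHandle).
class BpBinder : public IBinder {
public:
    explicit BpBinder(int32_t handle) : mHandle(handle) {}
    int32_t handle() const { return mHandle; }
private:
    int32_t mHandle;
};

// Every proxy gets mRemote (and remote()) from here.
class BpRefBase {
public:
    IBinder* remote() const { return mRemote.get(); }
protected:
    explicit BpRefBase(std::shared_ptr<IBinder> o) : mRemote(std::move(o)) {}
private:
    std::shared_ptr<IBinder> mRemote;
};

class IInterface {
public:
    virtual ~IInterface() = default;
};

// Same shape as the BpInterface template above.
template <typename INTERFACE>
class BpInterface : public INTERFACE, public BpRefBase {
public:
    explicit BpInterface(std::shared_ptr<IBinder> impl) : BpRefBase(std::move(impl)) {}
};

class IServiceManager : public IInterface {
    // addService(), getService(), ... omitted in this model
};

class BpServiceManager : public BpInterface<IServiceManager> {
public:
    explicit BpServiceManager(std::shared_ptr<IBinder> impl)
        : BpInterface<IServiceManager>(std::move(impl)) {}
};
```

Constructing `BpServiceManager sm(std::make_shared<BpBinder>(0));` gives an object whose `remote()` is the handle-0 reference.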

Creating the Service Manager remote interface comes down to the statement below, which involves three steps:

```cpp
gDefaultServiceManager = interface_cast<IServiceManager>(ProcessState::self()->getContextObject(NULL));
```

First, `ProcessState::self()`:

```cpp
sp<ProcessState> ProcessState::self()
{
    if (gProcess != NULL) return gProcess;
    AutoMutex _l(gProcessMutex);
    if (gProcess == NULL) gProcess = new ProcessState;
    return gProcess;
}
```

This simply creates a singleton. Its constructor is:

```cpp
ProcessState::ProcessState()
    : mDriverFD(open_driver())
    , mVMStart(MAP_FAILED)
    , mManagesContexts(false)
    , mBinderContextCheckFunc(NULL)
    , mBinderContextUserData(NULL)
    , mThreadPoolStarted(false)
    , mThreadPoolSeq(1)
{
    if (mDriverFD >= 0) {
        // XXX Ideally, there should be a specific define for whether we
        // have mmap (or whether we could possibly have the kernel module
        // available).
#if !defined(HAVE_WIN32_IPC)
        // mmap the binder, providing a chunk of virtual address space to receive transactions.
        mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
        if (mVMStart == MAP_FAILED) {
            // *sigh*
            LOGE("Using /dev/binder failed: unable to mmap transaction memory.\n");
            close(mDriverFD);
            mDriverFD = -1;
        }
#else
        // Without mmap support the driver cannot be used.
        mDriverFD = -1;
#endif
    }

    if (mDriverFD < 0) {
        // Need to run without the driver, starting our own thread pool.
    }
}
```

It does three main things:

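- calls `open()` (inside `open_driver()`) to open the `/dev/binder` driver device;

- calls `mmap()` to reserve a mapped address range of `BINDER_VM_SIZE` bytes (1 MB minus 8 KB);

- sets the maximum number of concurrent Binder threads for the current process to 16. This happens inside `open_driver()`, which is not shown above. It passes 15 to the driver with `ioctl(BINDER_SET_MAX_THREADS)`; counting the main Binder thread, that gives 16.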
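A ProcessState-like singleton can be sketched with `std::call_once`. It gives the same create-exactly-once guarantee that `ProcessState::self()` gets from `gProcessMutex`. Some assumptions to note:

- `ProcessStateModel` is hypothetical and opens nothing.
- The constants restate the numbers above, assuming 4 KiB pages. Newer AOSP versions compute the size from `sysconf(_SC_PAGE_SIZE)`.
- The thread limit of 15 is my understanding of `DEFAULT_MAX_BINDER_THREADS`, not something shown in the code above.

```cpp
#include <cstddef>
#include <mutex>

// Hypothetical stand-in for ProcessState: one lazily created instance per process.
class ProcessStateModel {
public:
    static ProcessStateModel& self() {
        // Runs the lambda exactly once, even if several threads call self() at once.
        std::call_once(once_, [] { instance_ = new ProcessStateModel(); });
        return *instance_;
    }
    static int constructions() { return constructions_; }

    // "1M-8K": one MiB minus two pages, assuming 4 KiB pages.
    static constexpr std::size_t kPageSize = 4096;
    static constexpr std::size_t kBinderVmSize = 1024 * 1024 - 2 * kPageSize;
    // Limit passed with BINDER_SET_MAX_THREADS (assumed value, see the lead-in).
    static constexpr std::size_t kDefaultMaxBinderThreads = 15;

private:
    // The real constructor would call open_driver() and mmap() here.
    ProcessStateModel() { ++constructions_; }

    static inline std::once_flag once_;
    static inline ProcessStateModel* instance_ = nullptr;  // never freed, like gProcess
    static inline int constructions_ = 0;
};
```

Calling `ProcessStateModel::self()` any number of times returns the same object, and `kBinderVmSize` evaluates to 1040384 bytes.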

Second, `ProcessState::self()->getContextObject(NULL)` returns a Binder reference whose handle is 0, i.e. a `BpBinder` created by `new BpBinder(0)`.

Third, `interface_cast<IServiceManager>()` is a function template that ends up calling `IServiceManager::asInterface()`:

```cpp
template<typename INTERFACE>
inline sp<INTERFACE> interface_cast(const sp<IBinder>& obj)
{
    return INTERFACE::asInterface(obj);
}

android::sp<IServiceManager> IServiceManager::asInterface(const android::sp<android::IBinder>& obj)
{
    android::sp<IServiceManager> intr;
    if (obj != NULL) {
        intr = static_cast<IServiceManager*>(
            obj->queryLocalInterface(IServiceManager::descriptor).get());
        if (intr == NULL) {
            intr = new BpServiceManager(obj);
        }
    }
    return intr;
}
```

So what actually happens is:

```cpp
gDefaultServiceManager = new BpServiceManager(new BpBinder(0));
```

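In other words, the Service Manager remote interface is essentially a `BpServiceManager` that contains a Binder reference with handle 0. Obtaining it involves no cross-process call.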
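The branch inside `asInterface()` decides between using an object directly and wrapping it in a proxy. The sketch below models it with `std::shared_ptr` instead of `sp<>`. `LocalServiceManager`, `isProxy()` and the class layout are hypothetical simplifications.

- Passing a `BpBinder(0)` yields a proxy, as in `defaultServiceManager()`.
- Passing an object that lives in the same process returns that object itself.

```cpp
#include <memory>
#include <string>
#include <utility>

struct IInterface {
    virtual ~IInterface() = default;
};

struct IBinder : std::enable_shared_from_this<IBinder> {
    virtual ~IBinder() = default;
    // Default: no local interface behind this binder (true for a proxy).
    virtual std::shared_ptr<IInterface> queryLocalInterface(const std::string&) { return nullptr; }
};

struct BpBinder : IBinder {  // reference to a remote object
    explicit BpBinder(int h) : handle(h) {}
    int handle;
};

struct IServiceManager : IInterface {
    static inline const std::string descriptor = "android.os.IServiceManager";
    virtual bool isProxy() const = 0;
    static std::shared_ptr<IServiceManager> asInterface(const std::shared_ptr<IBinder>& obj);
};

// An object living in this process: both the interface and the IBinder.
struct LocalServiceManager : IServiceManager, IBinder {
    bool isProxy() const override { return false; }
    std::shared_ptr<IInterface> queryLocalInterface(const std::string& d) override {
        if (d != descriptor) return nullptr;
        return std::static_pointer_cast<LocalServiceManager>(shared_from_this());
    }
};

struct BpServiceManager : IServiceManager {  // proxy that wraps a reference
    explicit BpServiceManager(std::shared_ptr<IBinder> r) : remote(std::move(r)) {}
    bool isProxy() const override { return true; }
    std::shared_ptr<IBinder> remote;
};

// Same logic as the asInterface() shown above.
std::shared_ptr<IServiceManager> IServiceManager::asInterface(const std::shared_ptr<IBinder>& obj) {
    std::shared_ptr<IServiceManager> intr;
    if (obj) {
        intr = std::static_pointer_cast<IServiceManager>(obj->queryLocalInterface(descriptor));
        if (!intr) intr = std::make_shared<BpServiceManager>(obj);
    }
    return intr;
}

template <typename INTERFACE>
std::shared_ptr<INTERFACE> interface_cast(const std::shared_ptr<IBinder>& obj) {
    return INTERFACE::asInterface(obj);
}
```

The design choice is that callers never need to know whether a service is local: the same `interface_cast` call works in both cases, and only remote objects pay for a proxy.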

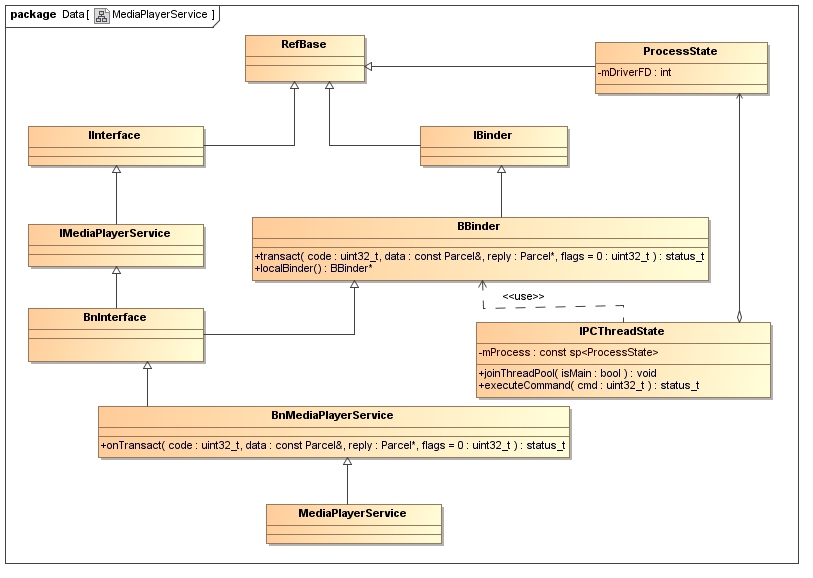

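Initializing and registering an ordinary Service on the native side

In the previous section we saw the class diagram of the ServiceManager remote interface, which is in fact a `BpServiceManager`. Here we take `MediaPlayerService` as an example and look at the class diagram of the server side.

As you can see, the structure is very similar to the Bp side. The difference is the inheritance chain:

- `MediaPlayerService` inherits from `BnMediaPlayerService`;
- `BnMediaPlayerService` inherits from `BnInterface`;
- `BnInterface` inherits from `BBinder`.

So on this side the implementation of `IBinder` is `BBinder`. The rest is analogous.

```cpp
template<typename INTERFACE>
```

The startup of MediaPlayerService:

```cpp
int main(int argc, char** argv)
```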

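The main steps are:

- `sp<ProcessState> proc(ProcessState::self());` was analyzed in the previous section. It mainly opens the binder device and maps memory.
- `sp<IServiceManager> sm = defaultServiceManager();` obtains the ServiceManager interface, also analyzed in the previous section.
- `MediaPlayerService::instantiate()`:

```cpp
void MediaPlayerService::instantiate() {
    defaultServiceManager()->addService(
        String16("media.player"), new MediaPlayerService());
}
```

`addService()` takes two arguments: the name of the service and the object that implements it. First, here is the definition of `BpServiceManager`, which `defaultServiceManager()` returns: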

```cpp
class BpServiceManager : public BpInterface<IServiceManager>
{
public:
    BpServiceManager(const sp<IBinder>& impl)
        : BpInterface<IServiceManager>(impl)
    {
    }
    ...
    virtual status_t addService(const String16& name, const sp<IBinder>& service)
    {
        Parcel data, reply;
        // IServiceManager::getInterfaceDescriptor() returns the string
        // "android.os.IServiceManager", written here as the interface token
        data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());
        // name is "media.player", written as a string
        data.writeString16(name);
        data.writeStrongBinder(service);
        status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);
        return err == NO_ERROR ? reply.readExceptionCode() : err;
    }
    ...
};

status_t Parcel::writeStrongBinder(const sp<IBinder>& val)
{
    return flatten_binder(ProcessState::self(), val, this);
}
```

Here `flatten_binder(ProcessState::self(), val, this)` converts the `IBinder` implementation passed in (`service`) into a `flat_binder_object` and serializes it into the `Parcel`. Every Binder entity or reference is represented by a `flat_binder_object`:

- for a Binder entity, its `binder` member is used;
- for a Binder reference, its `handle` member is used;
- `cookie` is meaningful only for a Binder entity. It carries extra data that the owning process interprets itself.

The code is:

```cpp
status_t flatten_binder(const sp<ProcessState>& proc, const sp<IBinder>& binder, Parcel* out)
{
    flat_binder_object obj;
    obj.flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;
    if (binder != NULL) {
        IBinder *local = binder->localBinder();
        if (!local) {
            BpBinder *proxy = binder->remoteBinder();
            if (proxy == NULL) {
                LOGE("null proxy");
            }
            const int32_t handle = proxy ? proxy->handle() : 0;
            obj.type = BINDER_TYPE_HANDLE;
            obj.handle = handle;
            obj.cookie = NULL;
        } else { // taken in this case: service is a BBinder, i.e. a service entity
            obj.type = BINDER_TYPE_BINDER;
            obj.binder = local->getWeakRefs();
            obj.cookie = local;
        }
    } else {
        obj.type = BINDER_TYPE_BINDER;
        obj.binder = NULL;
        obj.cookie = NULL;
    }
    return finish_flatten_binder(binder, obj, out); // serialize: write the flat_binder_object into out
}
```

So in this case `writeStrongBinder()` turns the `IBinder` into a `flat_binder_object` and writes it into the `Parcel` `out`, which is `data` itself. Now back to `addService()` above.

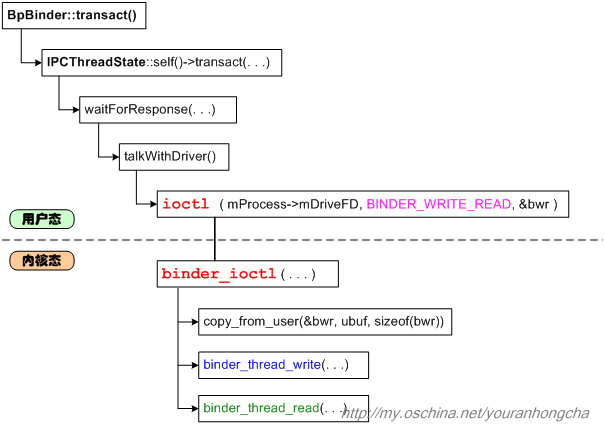

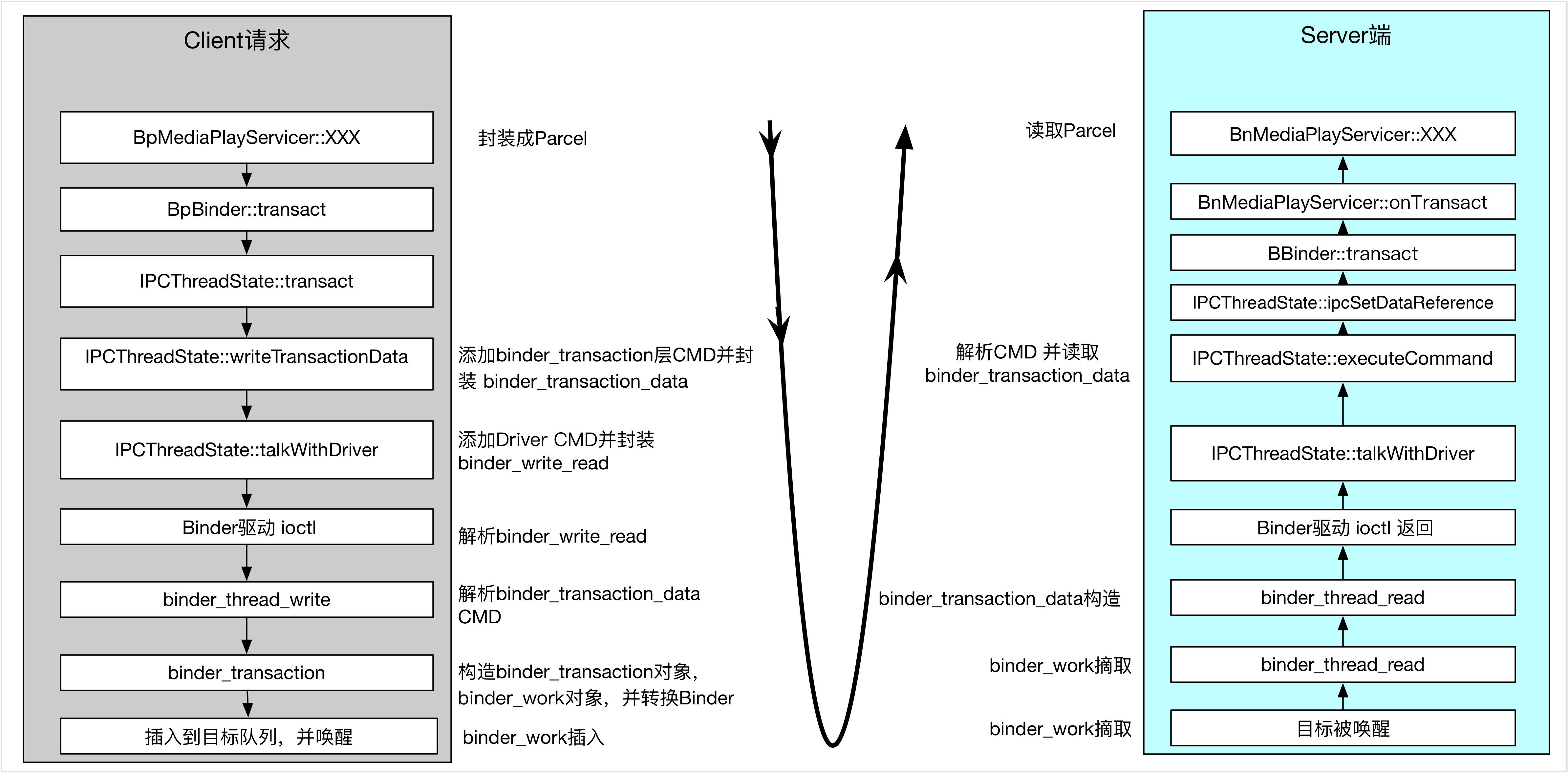
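The decision `flatten_binder()` makes can be isolated in a few lines. In the model below the field names follow `flat_binder_object`, but several pieces are simplifications for illustration: `BinderType`, `LocalBinder`, and the separate `binder`/`handle` fields (the real struct uses a union).

- A local object becomes an entity whose `cookie` points back at it.
- A proxy becomes a reference that carries only its handle.

```cpp
#include <cstdint>

enum class BinderType { BINDER, HANDLE };  // stands in for BINDER_TYPE_BINDER / _HANDLE

// Simplified flat_binder_object (the real one keeps binder/handle in a union).
struct FlatBinderObjectModel {
    BinderType type;
    const void* binder;   // entity: address of the object's weak-ref block
    uint32_t handle;      // reference: handle number
    const void* cookie;   // entity: the BBinder itself, only meaningful to its owner
};

struct LocalBinder { int weakrefs = 0; };  // hypothetical stand-in for a BBinder

// local != nullptr: the object lives in this process (a Binder entity).
// local == nullptr: we only hold a reference identified by proxy_handle.
FlatBinderObjectModel flatten(LocalBinder* local, uint32_t proxy_handle) {
    FlatBinderObjectModel obj{};
    if (local != nullptr) {
        obj.type = BinderType::BINDER;
        obj.binder = &local->weakrefs;  // real code: local->getWeakRefs()
        obj.cookie = local;             // real code: the BBinder pointer
    } else {
        obj.type = BinderType::HANDLE;
        obj.handle = proxy_handle;      // real code: proxy->handle()
        obj.cookie = nullptr;
    }
    return obj;
}
```

For the `addService()` call above, `service` is local, so the first branch applies, and the driver later sees a `BINDER`-type object whose `cookie` is the service object.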

writeStrongBinder结束后,就开始调用status_t err = remote()->tra nsact(ADD_SERVICE_TRANSACTION, data, &reply);,因为这里是BpServiceManager,所以remote()成员函数来自于BpRefBase类,它返回一个BpBinder指针,也就是BpBinder(0)。后面是一连串的调用链:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104// android / platform / frameworks / native / master / . / libs / binder / BpBinder.cpp

status_t BpBinder::transact(uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

...

//IPCThreadState::self() 里面会初始化自己的成员变量mIn,mOut

status_t status = IPCThreadState::self()->transact(mHandle, code, data, reply, flags);

...

}

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

flags |= TF_ACCEPT_FDS;

. . . . . .

// 把data数据整理进内部的mOut包中

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);

. . . . . .

if ((flags & TF_ONE_WAY) == 0)

{

. . . . . .

if (reply)

{

err = waitForResponse(reply);

}

else

{

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

. . . . . .

}

else

{

//oneway,则不需要等待reply的场景

err = waitForResponse(NULL, NULL);

}

return err;

}

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

//把数据封装成 binder_transaction_data

binder_transaction_data tr;

tr.target.handle = handle; // handle = 0

tr.code = code; // code = ADD_SERVICE_TRANSACTION

tr.flags = binderFlags;

// data为记录Media服务信息的Parcel对象

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(size_t);

tr.data.ptr.offsets = data.ipcObjects();

}

....

mOut.writeInt32(cmd); //cmd = BC_TRANSACTION

mOut.write(&tr, sizeof(tr)); //写入 binder_transaction_data 数据

return NO_ERROR;

}

//在waitForResponse过程, 首先回复之前的ADD_SERVICE信息,执行BR_TRANSACTION_COMPLETE;另外,在目标进程收到事务后,处理BR_TRANSACTION事务。 然后发送给当前进程,再执行BR_REPLY命令。

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

...

// talkWithDriver()内部会完成跨进程事务

if ((err=talkWithDriver()) < NO_ERROR) break;

...

// 事务的回复信息被记录在mIn中,所以需要进一步分析这个

cmd = mIn.readInt32();

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

...

}

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

//把mOut数据和mIn的数据处理后构造一个binder_write_read对象

binder_write_read bwr;

bwr.write_size = outAvail;

bwr.write_buffer = (long unsigned int)mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

//接收数据缓冲区信息的填充。如果以后收到数据,就直接填在mIn中了。

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (long unsigned int)mIn.data();

} else {

bwr.read_size = 0;

}

...

do {

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

// 这里设置收到的回复数据

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

} while (err == -EINTR); //当被中断,则继续执行

...

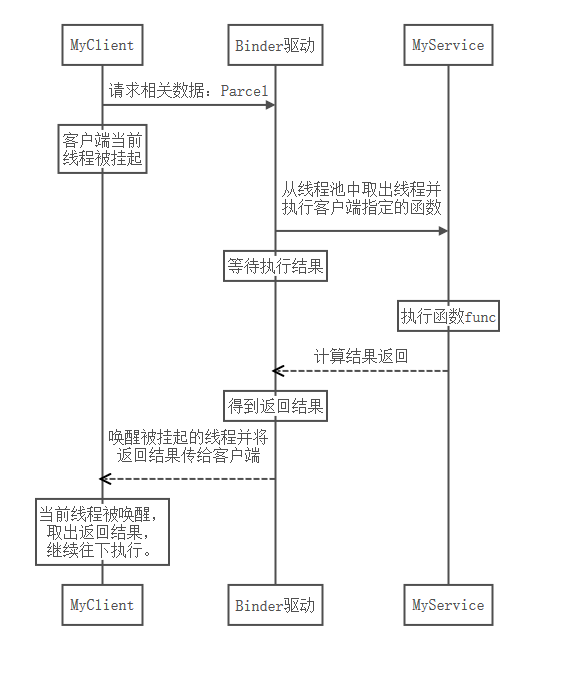

}短暂的总结一下流程:

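From here on we are in Binder driver territory. All you really need to know is:

- `talkWithDriver()` leaves the result in `mIn`;
- `waitForResponse()` then sets the returned data with `reply->ipcSetDataReference()`.

(There is a lot in the driver that I did not understand myself.)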
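`mOut` is essentially a flat byte buffer of commands, each followed by its payload. `talkWithDriver()` hands the driver a pointer to it through `bwr.write_buffer`. The sketch below models only that layout. `TxnModel` is a hypothetical, trimmed-down stand-in for `binder_transaction_data`, and the command value `1` used below is a placeholder, not the real `BC_TRANSACTION`.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, trimmed-down binder_transaction_data.
struct TxnModel {
    uint32_t handle;     // target.handle, 0 = ServiceManager
    uint32_t code;       // e.g. ADD_SERVICE_TRANSACTION
    uint32_t flags;
    uint64_t data_size;  // bytes of Parcel payload
};

// mOut modelled as raw bytes: [int32 cmd][TxnModel][int32 cmd][...]...
struct OutBuffer {
    std::vector<uint8_t> bytes;
    template <typename T>
    void write(const T& v) {  // append the raw bytes of v
        const auto* p = reinterpret_cast<const uint8_t*>(&v);
        bytes.insert(bytes.end(), p, p + sizeof(T));
    }
};

// Same two writes as writeTransactionData() above.
void writeTransactionDataModel(OutBuffer& mOut, int32_t cmd, const TxnModel& tr) {
    mOut.write(cmd);  // mOut.writeInt32(cmd)
    mOut.write(tr);   // mOut.write(&tr, sizeof(tr))
}

// What the receiving side does with write_buffer: read a command, then its payload.
bool readCommand(const std::vector<uint8_t>& buf, size_t& pos, int32_t& cmd, TxnModel& tr) {
    if (pos + sizeof(cmd) + sizeof(tr) > buf.size()) return false;
    std::memcpy(&cmd, buf.data() + pos, sizeof(cmd));
    pos += sizeof(cmd);
    std::memcpy(&tr, buf.data() + pos, sizeof(tr));
    pos += sizeof(tr);
    return true;
}
```

In the real code the payload itself is not copied into `mOut`. The struct only carries the pointers `tr.data.ptr.buffer` and `tr.data.ptr.offsets`, and the driver follows them when it copies the data into the target process.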

```c
// kernel driver,
```

Now we are in the service process.

ServiceManager has been blocked in the `ioctl()` call inside `binder_loop`, because `binder_thread_read()` was waiting in `wait_event_interruptible_exclusive()`. Here the driver wakes it up with `wake_up_interruptible`:

```c
void binder_loop(struct binder_state *bs, binder_handler func)
```

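Once woken, the data travels back to user space in these steps:

1. `binder_thread_read()` reads the data passed by the driver into a local variable `struct binder_transaction_data tr`.
2. It copies the contents of `tr` into the buffer supplied by user space.
3. After that returns, the local variable `struct binder_write_read bwr` in `binder_ioctl()` is copied back into the user's buffer as well.
4. `binder_ioctl()` returns. As soon as there is work, it breaks out of its loop and returns.

Then `binder_parse()` is executed:

```c
int binder_parse(struct binder_state *bs, struct binder_io *bio,
```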

The function pointer passed to it is `svcmgr_handler`, so execution reaches:

```c
int svcmgr_handler(struct binder_state *bs,
```

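Finally, `binder_send_reply()` is called. It enters `ioctl()` again, packages the data as a transaction once more, and wakes up MediaPlayerService to process it.

For ServiceManager, this is the end: `IServiceManager::addService()` has completed.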
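At its core, `svcmgr_handler()` is a switch on the transaction code that either records a name-to-handle mapping or looks one up. The model below keeps only that idea. `ServiceRegistryModel`, `SvcCode` and the reply convention are hypothetical; the real handler parses a `binder_io`, checks permissions, and supports more codes.

The handle it stores is valid in ServiceManager's own handle table. The driver translates it again when it is sent back to a client.

```cpp
#include <cstdint>
#include <map>
#include <optional>
#include <string>

enum class SvcCode { ADD_SERVICE, CHECK_SERVICE };  // illustrative codes only

class ServiceRegistryModel {
public:
    // ADD_SERVICE: returns 0 on success, nothing if the request is rejected.
    // CHECK_SERVICE: returns the stored handle, or nothing if the name is unknown.
    std::optional<uint32_t> handle_request(SvcCode code, const std::string& name,
                                           uint32_t service_handle = 0) {
        switch (code) {
        case SvcCode::ADD_SERVICE:
            if (name.empty() || service_handle == 0) return std::nullopt;
            services_[name] = service_handle;  // a handle in ServiceManager's own table
            return 0u;
        case SvcCode::CHECK_SERVICE: {
            auto it = services_.find(name);
            if (it == services_.end()) return std::nullopt;
            return it->second;
        }
        }
        return std::nullopt;
    }

private:
    std::map<std::string, uint32_t> services_;  // name -> handle
};
```

Registering `"media.player"` under handle 5 and then checking the same name returns 5, while an unknown name returns an empty result.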

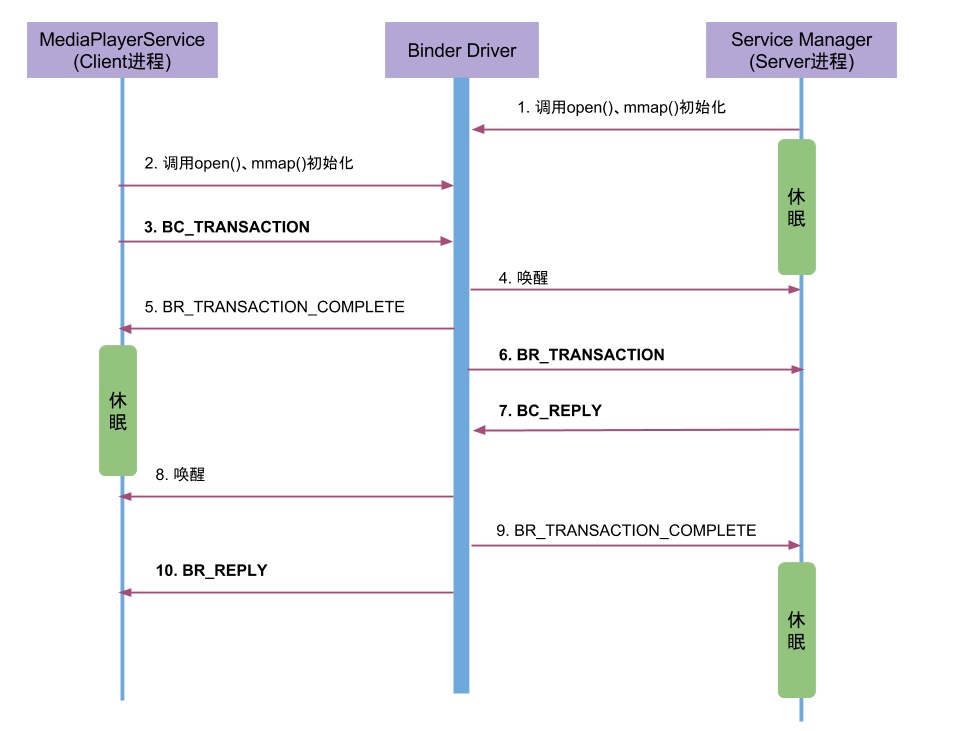

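Data flow:

- The `BBinder` and the name are serialized into a `Parcel`.

- Together with `target = 0` and `code = ADD_SERVICE_TRANSACTION`, it is converted into a `binder_transaction_data` object.

- Prefixed with `cmd = BC_TRANSACTION`, that is written into the `mOut` of `IPCThreadState`.

- `mOut` and `mIn` are used to build a `binder_write_read` object, which is sent to the Binder driver.

- The Binder driver copies the data into the kernel and builds a `binder_transaction_data` that it puts on the service process's todo queue. At the same time it replies `BR_TRANSACTION_COMPLETE` to the calling process.

  Why copy at all, when passing a pointer would be easier? The pointer refers to the client process's address space. The kernel can access that memory, but the service process cannot, and pointers are not valid across processes.

- The service process builds a `binder_transaction_data` and sends it back to the Binder driver with `BC_REPLY`.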
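The list above can be condensed into a toy driver that only moves command names and a payload between two queues, one per process. `DriverModel` and `Msg` are hypothetical. The point is to show who receives which `BR_*` command, not how the real driver works. Copying `payload` into the peer's queue mirrors the single copy described above.

```cpp
#include <deque>
#include <string>

struct Msg {
    std::string cmd;      // BC_* written by a process, BR_* delivered to one
    std::string payload;  // stands in for the Parcel data
};

// Hypothetical driver model: one incoming queue (future mIn contents) per side.
struct DriverModel {
    std::deque<Msg> clientIn, serverIn;

    // A BINDER_WRITE_READ whose write buffer holds one BC_* command.
    void write(bool fromClient, const Msg& m) {
        auto& sender = fromClient ? clientIn : serverIn;
        auto& peer = fromClient ? serverIn : clientIn;
        if (m.cmd == "BC_TRANSACTION") {
            peer.push_back({"BR_TRANSACTION", m.payload});  // copied once to the target
        } else if (m.cmd == "BC_REPLY") {
            peer.push_back({"BR_REPLY", m.payload});        // reply goes back to the caller
        }
        sender.push_back({"BR_TRANSACTION_COMPLETE", ""});  // acknowledge the writer
    }
};
```

For a synchronous call, the client therefore reads `BR_TRANSACTION_COMPLETE` first and `BR_REPLY` later, which is why `waitForResponse()` keeps looping until the reply arrives.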

The above describes the `binder_loop` loop of service_manager. For other services, the Binder threads are started by these two statements:

```cpp
ProcessState::self()->startThreadPool();
IPCThreadState::self()->joinThreadPool();
```

`joinThreadPool()` and the `getAndExecuteCommand()` it calls:

```cpp
void IPCThreadState::joinThreadPool(bool isMain)
{
    mOut.writeInt32(isMain ? BC_ENTER_LOOPER : BC_REGISTER_LOOPER);
    set_sched_policy(mMyThreadId, SP_FOREGROUND);
    status_t result;
    do {
        processPendingDerefs(); // handle pending object dereferences
        result = getAndExecuteCommand(); // fetch and execute a command
        if (result < NO_ERROR && result != TIMED_OUT && result != -ECONNREFUSED && result != -EBADF) {
            ALOGE("getAndExecuteCommand(fd=%d) returned unexpected error %d, aborting",
                  mProcess->mDriverFD, result);
            abort();
        }
        // A non-main binder thread that is no longer needed (timed out) exits
        if(result == TIMED_OUT && !isMain) {
            break;
        }
    } while (result != -ECONNREFUSED && result != -EBADF);
    mOut.writeInt32(BC_EXIT_LOOPER);
    talkWithDriver(false);
}

status_t IPCThreadState::getAndExecuteCommand()
{
    status_t result;
    int32_t cmd;
    result = talkWithDriver(); // talk to the Binder driver
    if (result >= NO_ERROR) {
        size_t IN = mIn.dataAvail();
        if (IN < sizeof(int32_t)) return result;
        cmd = mIn.readInt32(); // read the command
        pthread_mutex_lock(&mProcess->mThreadCountLock);
        mProcess->mExecutingThreadsCount++;
        pthread_mutex_unlock(&mProcess->mThreadCountLock);
        result = executeCommand(cmd);
        pthread_mutex_lock(&mProcess->mThreadCountLock);
        mProcess->mExecutingThreadsCount--;
        pthread_cond_broadcast(&mProcess->mThreadCountDecrement);
        pthread_mutex_unlock(&mProcess->mThreadCountLock);
        set_sched_policy(mMyThreadId, SP_FOREGROUND);
    }
    return result;
}
```

As analyzed above, `talkWithDriver()` sends whatever is in `mOut` to the driver and fills `mIn` with whatever the driver returns. For a thread in the pool, what comes back is typically a `BR_TRANSACTION` carrying a client's request. So once data is returned, the command is read from `mIn` and passed to `executeCommand()`:

```cpp
status_t IPCThreadState::executeCommand(int32_t cmd)
{
    BBinder* obj;
    RefBase::weakref_type* refs;
    status_t result = NO_ERROR;
    switch (cmd) {
    ...
    case BR_TRANSACTION:
        {
            binder_transaction_data tr;
            result = mIn.read(&tr, sizeof(tr));
            ALOG_ASSERT(result == NO_ERROR,
                "Not enough command data for brTRANSACTION");
            if (result != NO_ERROR) break;

            //Record the fact that we're in a binder call.
            mIPCThreadStateBase->pushCurrentState(
                IPCThreadStateBase::CallState::BINDER);
            Parcel buffer;
            buffer.ipcSetDataReference(
                reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),
                tr.data_size,
                reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),
                tr.offsets_size/sizeof(binder_size_t), freeBuffer, this);

            const pid_t origPid = mCallingPid;
            const uid_t origUid = mCallingUid;
            const int32_t origStrictModePolicy = mStrictModePolicy;
            const int32_t origTransactionBinderFlags = mLastTransactionBinderFlags;

            mCallingPid = tr.sender_pid;
            mCallingUid = tr.sender_euid;
            mLastTransactionBinderFlags = tr.flags;

            //ALOGI(">>>> TRANSACT from pid %d uid %d\n", mCallingPid, mCallingUid);

            Parcel reply;
            status_t error;
            IF_LOG_TRANSACTIONS() {
                TextOutput::Bundle _b(alog);
                alog << "BR_TRANSACTION thr " << (void*)pthread_self()
                    << " / obj " << tr.target.ptr << " / code "
                    << TypeCode(tr.code) << ": " << indent << buffer
                    << dedent << endl
                    << "Data addr = "
                    << reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer)
                    << ", offsets addr="
                    << reinterpret_cast<const size_t*>(tr.data.ptr.offsets) << endl;
            }
            if (tr.target.ptr) {
                // We only have a weak reference on the target object, so we must first try to
                // safely acquire a strong reference before doing anything else with it.
                if (reinterpret_cast<RefBase::weakref_type*>(
                        tr.target.ptr)->attemptIncStrong(this)) {
                    // tr.cookie is the BBinder that flatten_binder() stored in cookie
                    error = reinterpret_cast<BBinder*>(tr.cookie)->transact(tr.code, buffer,
                            &reply, tr.flags);
                    reinterpret_cast<BBinder*>(tr.cookie)->decStrong(this);
                } else {
                    error = UNKNOWN_TRANSACTION;
                }
            } else {
                error = the_context_object->transact(tr.code, buffer, &reply, tr.flags);
            }

            mIPCThreadStateBase->popCurrentState();
            //ALOGI("<<<< TRANSACT from pid %d restore pid %d uid %d\n",
            //     mCallingPid, origPid, origUid);

            if ((tr.flags & TF_ONE_WAY) == 0) {
                LOG_ONEWAY("Sending reply to %d!", mCallingPid);
                if (error < NO_ERROR) reply.setError(error);
                sendReply(reply, 0);
            } else {
                LOG_ONEWAY("NOT sending reply to %d!", mCallingPid);
            }

            mCallingPid = origPid;
            mCallingUid = origUid;
            mStrictModePolicy = origStrictModePolicy;
            mLastTransactionBinderFlags = origTransactionBinderFlags;

            IF_LOG_TRANSACTIONS() {
                TextOutput::Bundle _b(alog);
                alog << "BC_REPLY thr " << (void*)pthread_self() << " / obj "
                    << tr.target.ptr << ": " << indent << reply << dedent << endl;
            }
        }
        break;
    ...
    }

    if (result != NO_ERROR) {
        mLastError = result;
    }

    return result;
}

status_t BBinder::transact(uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)
{
    data.setDataPosition(0);

    status_t err = NO_ERROR;
    switch (code) {
        case PING_TRANSACTION:
            reply->writeInt32(pingBinder());
            break;
        default:
            err = onTransact(code, data, reply, flags);
            break;
    }

    if (reply != NULL) {
        reply->setDataPosition(0);
    }

    return err;
}
```

Because the service inherits from `BBinder`, this actually ends up in the service's `onTransact()`, i.e. MediaPlayerService's `onTransact()`, which performs the requested action. That completes the path from the client, across processes, into the server.
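The server side can be summarized in two small models:

- `BBinderModel::transact()` answers a ping itself and forwards every other code to the virtual `onTransact()`, which a concrete service overrides.
- `joinThreadPoolModel()` restates the exit rule of the `joinThreadPool()` loop.

All names and transaction codes here are hypothetical. Treating `TIMED_OUT` as `-ETIMEDOUT` is my understanding of libutils' error codes.

```cpp
#include <cerrno>
#include <cstdint>
#include <string>
#include <vector>

constexpr uint32_t kPingTransaction = 1;  // placeholder, not the real PING_TRANSACTION value
constexpr uint32_t kCreatePlayer = 2;     // hypothetical service-specific code

// Template-method shape of BBinder: transact() is fixed, onTransact() is virtual.
class BBinderModel {
public:
    virtual ~BBinderModel() = default;
    int transact(uint32_t code, const std::string& data, std::string* reply) {
        switch (code) {
        case kPingTransaction:
            if (reply) *reply = "pong";  // handled by the base class itself
            return 0;
        default:
            return onTransact(code, data, reply);
        }
    }

protected:
    virtual int onTransact(uint32_t, const std::string&, std::string*) {
        return -1;  // stands in for UNKNOWN_TRANSACTION
    }
};

// A concrete service only overrides onTransact().
class MediaPlayerServiceModel : public BBinderModel {
protected:
    int onTransact(uint32_t code, const std::string& data, std::string* reply) override {
        if (code == kCreatePlayer) {
            if (reply) *reply = "player for " + data;
            return 0;
        }
        return BBinderModel::onTransact(code, data, reply);
    }
};

// Exit rule of joinThreadPool(): leave on -ECONNREFUSED or -EBADF; a non-main
// (spawned) thread also leaves on a timeout. Returns the iterations executed.
int joinThreadPoolModel(bool isMain, const std::vector<int>& results) {
    int iterations = 0;
    for (int result : results) {
        ++iterations;
        if (result == -ETIMEDOUT && !isMain) break;
        if (result == -ECONNREFUSED || result == -EBADF) break;
    }
    return iterations;
}
```

The design choice this mirrors: generic behaviour lives in the base class, and each service supplies only the codes it understands.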

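Obtaining a service interface on the client side (native):

The previous section analyzed `BpServiceManager::addService()`; this section looks at `getService(name)`.

```cpp
class BpServiceManager : public BpInterface<IServiceManager>
```

Most of this call chain was covered in the previous section, so here is only a brief description:

```cpp
remote()->transact(CHECK_SERVICE_TRANSACTION, data, &reply);
```

The end result is a `BpMediaPlayerService` object.

Summary (C++ part)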

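- Obtaining the ServiceManager remote interface needs no IPC. ServiceManager's Binder entity always has handle 0, so `new BpBinder(0)` is enough to get the Binder reference, from which the `BpServiceManager` is built.

- Obtaining the remote interface of an ordinary service does require IPC, because the request has to go through `BpServiceManager` to ServiceManager. The steps are:
  - ServiceManager returns to the driver the handle of the Binder entity registered under that name;
  - the driver reads it, translates it into a handle that is valid in the client process, serializes the result, and returns it to the client;
  - the client calls `new BpBinder(handle)` to get the remote proxy of that service.

- When ServiceManager's own functions are called (e.g. `addService`, `getService`), ServiceManager parses the data from the driver in its `binder_loop` function, handles the request directly, and returns the result to the driver. When an ordinary service's functions are called, the data from the driver is dispatched to the virtual functions of `BBinder`.

- `IPCThreadState` relies on `ProcessState` to interact with the Binder driver.

- Note how Binder objects travel. For example:
  - the `BBinder` passed to `addService()` is serialized by `writeStrongBinder()` into a `flat_binder_object` before being handed to the driver;
  - in `getService()`, the driver returns a `flat_binder_object` that contains the server's handle;
  - `readStrongBinder()` turns that object into a `BpBinder` and returns it to the caller.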
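The last point is handled by the driver's per-process reference tables: a `BBinder` sent out by one process arrives elsewhere as a handle. The toy model below illustrates it. Some simplifications to note:

- `NodeId` and `HandleTables` are hypothetical.
- Handles start at 1 because 0 is reserved for ServiceManager.
- The case where a handle is sent back to the node's own process is omitted. There the driver turns it back into a local object.

```cpp
#include <cstdint>
#include <map>
#include <optional>
#include <tuple>

// Identifies a Binder entity: the process hosting the BBinder and its address there.
struct NodeId {
    int owner_pid;
    uintptr_t ptr;
    bool operator<(const NodeId& o) const {
        return std::tie(owner_pid, ptr) < std::tie(o.owner_pid, o.ptr);
    }
};

class HandleTables {
public:
    // An entity (BINDER_TYPE_BINDER) arriving in to_pid becomes a handle that is
    // valid only in to_pid; the same node always maps to the same handle there.
    uint32_t translateEntity(const NodeId& node, int to_pid) {
        auto& refs = refsByNode_[to_pid];
        if (auto it = refs.find(node); it != refs.end()) return it->second;
        auto [slot, inserted] = nextHandle_.try_emplace(to_pid, 1u);  // 0 is reserved
        (void)inserted;
        const uint32_t h = slot->second++;
        refs.emplace(node, h);
        nodesByHandle_[to_pid].emplace(h, node);
        return h;
    }

    // A handle (BINDER_TYPE_HANDLE) sent by from_pid is resolved to its node and
    // re-expressed as a handle in to_pid, e.g. ServiceManager replying to getService().
    std::optional<uint32_t> translateHandle(int from_pid, uint32_t handle, int to_pid) {
        auto pit = nodesByHandle_.find(from_pid);
        if (pit == nodesByHandle_.end()) return std::nullopt;
        auto nit = pit->second.find(handle);
        if (nit == pit->second.end()) return std::nullopt;
        const NodeId node = nit->second;  // copy before the tables are modified
        return translateEntity(node, to_pid);
    }

private:
    std::map<int, std::map<NodeId, uint32_t>> refsByNode_;     // pid -> node -> handle
    std::map<int, std::map<uint32_t, NodeId>> nodesByHandle_;  // pid -> handle -> node
    std::map<int, uint32_t> nextHandle_;                       // pid -> next free handle
};
```

In the `addService`/`getService` scenario, MediaPlayerService's entity first becomes a handle in ServiceManager. ServiceManager's reply then carries that handle, which the driver turns into a different handle owned by the client.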

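Flow summary:

References:

- 《深入理解Android 卷1》 (Understanding Android in Depth, Volume 1)

- 《深入理解Android 卷3》 (Understanding Android in Depth, Volume 3)

- Android进程间通信(IPC)机制Binder简要介绍和学习计划 (A Brief Introduction to Android's Binder IPC Mechanism and a Study Plan)

- 深入分析Android Binder 驱动 (An In-Depth Analysis of the Android Binder Driver)

- 红茶一杯话Binder (Talking About Binder Over a Cup of Black Tea)

- 深入理解Binder原理 (Understanding How Binder Works)

- Parcel数据传输过程,简要分析Binder流程 (The Parcel Data Transfer Process: A Brief Analysis of the Binder Flow)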